In an evolving digital landscape where cybersecurity and web performance intersect, service providers like Cloudflare play a crucial role in protecting web applications from malicious traffic. However, in the quest to eliminate bots, well-meaning tools can sometimes go too far. This is exactly what happened when Cloudflare’s Bot Fight Mode began blocking legitimate API crawlers, leading to disrupted indexing and denied access for real users and search engines.

TLDR (Too Long; Didn’t Read)

Cloudflare’s Bot Fight Mode, designed to block malicious bots, inadvertently blocked legitimate crawlers and API clients, including search engine crawlers such as Googlebot and Bingbot and other indexing services. As a result, sites relying on Cloudflare’s firewall began noticing sudden drops in indexed pages and a rise in API errors. The issue was resolved by creating targeted firewall exceptions that allow only verified bots. This experience underscores the need to balance security with accessibility.

Understanding Bot Fight Mode

Bot Fight Mode is a feature introduced by Cloudflare to identify and challenge traffic that resembles automated, scripted access. When activated, it detects characteristics common in malicious bots—like rapid repeated requests, non-standard user-agents, and IPs with suspicious reputations—and either blocks or challenges them using JavaScript computation and CAPTCHA mechanisms.
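To make the idea concrete, here is a toy scoring sketch in Python. It is not Cloudflare’s algorithm, which is proprietary; the signals, weights, threshold, and the "partner-sync/1.2" client are all hypothetical. It shows how a legitimate API client that polls quickly and sends a non-browser user agent can cross a challenge threshold without doing anything malicious.

```python
from dataclasses import dataclass

# Toy model only: these signals, weights, and the threshold are hypothetical
# and do not reflect Cloudflare's actual (proprietary) detection logic.
BROWSER_TOKENS = ("Mozilla/", "AppleWebKit", "Gecko")


@dataclass
class ClientStats:
    user_agent: str
    requests_per_minute: int
    reputation_penalty: int  # assumed scale: 0 (clean IP) to 30 (known-bad IP)


def bot_score(stats: ClientStats) -> int:
    """Return a score from 0 to 100; higher means more bot-like."""
    score = 0
    if stats.requests_per_minute > 60:  # rapid, repeated requests
        score += 40
    if not any(tok in stats.user_agent for tok in BROWSER_TOKENS):  # non-browser UA
        score += 30
    score += min(max(stats.reputation_penalty, 0), 30)  # clamp to the assumed range
    return min(score, 100)


def decide(stats: ClientStats, threshold: int = 50) -> str:
    return "challenge" if bot_score(stats) >= threshold else "allow"


browser = ClientStats("Mozilla/5.0 (Windows NT 10.0; Win64; x64)", 5, 0)
partner = ClientStats("partner-sync/1.2", 120, 0)  # hypothetical API client
scraper = ClientStats("python-requests/2.31", 300, 30)

for name, stats in [("browser", browser), ("partner", partner), ("scraper", scraper)]:
    print(name, bot_score(stats), decide(stats))
```

In this toy model the partner client is challenged purely on request rate and user agent, which is exactly the kind of false positive described in the rest of this article.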

This feature is popular among website administrators hoping to reduce scraping, spam, and brute-force attacks. Its default behavior aggressively targets unknown bots, which works well in many cases. However, when enabled without careful configuration, Bot Fight Mode can cast too wide a net.

What Went Wrong: Blocking Legitimate API Crawlers

Several site operators began noticing what initially seemed like minor performance issues. Common symptoms included:

- Sudden drops in daily traffic from search engines

- Missing pages from Google Search Console’s index status

- Repeated 403 and 503 errors in server logs for requests from known bots

- API partners reporting that their integrations were failing unexpectedly
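The 403/503 symptom is easy to check directly. The Python sketch below assumes access logs in the widely used "combined" format (NGINX’s default, for example) and counts 403 and 503 responses per crawler token; the token list and sample lines are illustrative, and the sample IPs are reserved documentation addresses. Requests that Cloudflare blocks or challenges at the edge may never reach your origin, so check Cloudflare’s own security event logs as well.

```python
import re
from collections import Counter

# Matches the "combined" access-log format; adjust for custom formats.
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<ua>[^"]*)"'
)

# Crawler user-agent tokens to watch; add your API partners' tokens here.
BOT_TOKENS = ("Googlebot", "bingbot", "AhrefsBot", "SemrushBot")


def blocked_bot_hits(lines, statuses=("403", "503")):
    """Count (bot token, status) pairs for error responses served to crawlers."""
    counts = Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if not m or m["status"] not in statuses:
            continue
        ua = m["ua"].lower()
        for token in BOT_TOKENS:
            if token.lower() in ua:
                counts[(token, m["status"])] += 1
                break  # attribute each line to one crawler only
    return counts


SAMPLE = [
    '192.0.2.10 - - [10/Oct/2024:13:55:36 +0000] "GET /api/items HTTP/1.1" 403 162 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '198.51.100.7 - - [10/Oct/2024:13:56:01 +0000] "GET /sitemap.xml HTTP/1.1" 503 0 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"',
    '203.0.113.5 - - [10/Oct/2024:13:57:12 +0000] "GET / HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

print(blocked_bot_hits(SAMPLE))
```

A sustained count of errors against crawler user agents is a strong hint that a security layer, rather than the application, is turning them away.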

Upon investigation, the culprit was narrowed down to Bot Fight Mode. When enabled, it frequently flagged requests from legitimate bots—including:

- Googlebot – Google’s primary indexing crawler

- Bingbot – Microsoft’s web crawler for Bing Search

- AhrefsBot, SemrushBot – Tools used for SEO analysis

- Partner Integration Bots – API consumers from legitimate third-party services

Cloudflare’s documentation does indicate that Bot Fight Mode can interfere with “good bots,” but the extent of disruption in some cases went far beyond expectations—resulting in temporarily lost visibility on major search engines.

The Consequences of Overblocking

The unintended consequences of overblocking crawlers are significant. For sites that rely on organic traffic, search indexing is their lifeline. Blocking Googlebot for even a few days can cause losses in search visibility that take months to recover.

Similarly, businesses with public APIs often rely on approved external crawlers to aggregate or monitor content. When those APIs stop responding to legitimate clients because a firewall or bot detection tool is blocking them, integrations break and partnerships suffer.

These are more than just temporary annoyances; these issues can lead to:

- Revenue decline from lost search engine visibility

- Broken APIs for paying partners

- Damaged reputation due to non-functional integrations

- Sudden increase in customer support tickets related to API failures

Firewall Exceptions: The Solution That Worked

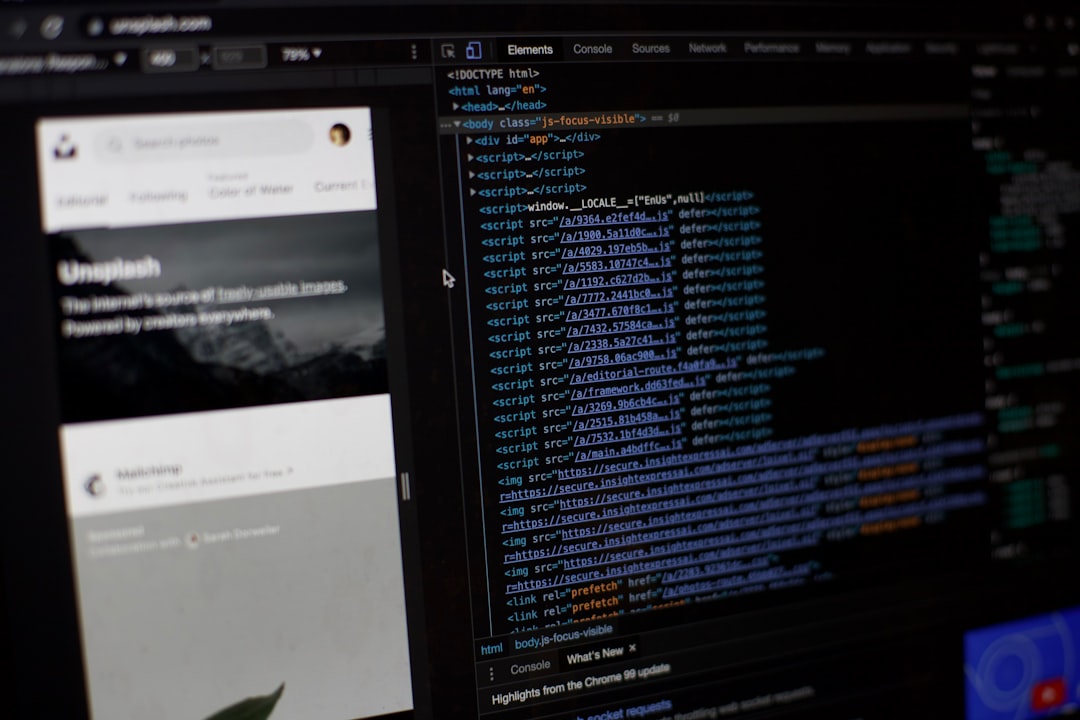

The solution came through Cloudflare’s Firewall Rules and Tools sections, which let developers and site operators filter traffic more precisely than Bot Fight Mode’s one-size-fits-all approach. By adding custom exceptions, administrators were able to allowlist specific verified bots and restore functionality.

Here are the key steps that helped reverse the damage:

1. Identifying Legitimate Bots

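Use the IP ranges and user agents published by major search engines and partners to distinguish good bots from unknown traffic. Because user-agent strings are trivial to spoof, confirm the source as well: Google and Microsoft both document verifying Googlebot and Bingbot with a reverse DNS lookup followed by a forward DNS lookup, and both publish their crawlers’ IP ranges.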
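A minimal Python sketch of both checks follows. It assumes the published range files use a JSON shape with a "prefixes" array of "ipv4Prefix"/"ipv6Prefix" entries (the format Google uses for its crawler ranges at the time of writing; confirm before relying on it). The hostname suffixes come from the search engines’ verification guides. The DNS check needs network access, and the sample ranges are reserved documentation addresses, not real crawler IPs.

```python
import ipaddress
import json
import socket

# Hostname suffixes documented by Google and Microsoft for crawler verification.
VERIFIED_SUFFIXES = {
    "Googlebot": (".googlebot.com", ".google.com"),
    "bingbot": (".search.msn.com",),
}


def host_matches(host: str, crawler: str) -> bool:
    """True if a reverse-DNS hostname belongs to the crawler's documented domains."""
    return host.rstrip(".").lower().endswith(VERIFIED_SUFFIXES[crawler])


def verify_by_dns(ip: str, crawler: str) -> bool:
    """Reverse lookup, domain check, then a forward lookup that must return the same IP."""
    try:
        host, _, _ = socket.gethostbyaddr(ip)
        if not host_matches(host, crawler):
            return False
        forward = {info[4][0] for info in socket.getaddrinfo(host, None)}
    except OSError:  # covers socket.herror and socket.gaierror
        return False
    target = ipaddress.ip_address(ip)
    # Strip any IPv6 zone index before comparing normalized addresses.
    return any(ipaddress.ip_address(a.split("%")[0]) == target for a in forward)


def load_prefixes(json_text: str):
    """Parse {"prefixes": [{"ipv4Prefix": ...} or {"ipv6Prefix": ...}, ...]}."""
    nets = []
    for entry in json.loads(json_text).get("prefixes", []):
        cidr = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if cidr:
            nets.append(ipaddress.ip_network(cidr))
    return nets


def in_published_ranges(ip: str, nets) -> bool:
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in nets)  # mixed IPv4/IPv6 checks return False


SAMPLE_RANGES = '{"prefixes": [{"ipv4Prefix": "192.0.2.0/24"}, {"ipv6Prefix": "2001:db8::/32"}]}'
nets = load_prefixes(SAMPLE_RANGES)
print(in_published_ranges("192.0.2.55", nets), in_published_ranges("198.51.100.1", nets))
```

The leading dot in each suffix matters: it stops a lookalike domain such as "evilgooglebot.com" from passing the hostname check.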

2. Creating IP and UA-Based Exceptions

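Using Cloudflare’s Firewall Rules, administrators can create Allow rules for specific user agents and IP ranges. Require both conditions to match: a rule that accepts the user agent alone would let through anyone who spoofs the Googlebot user agent. An example rule would be:

(http.user_agent contains "Googlebot" and ip.src in $googlebot_ips)

Here $googlebot_ips is a placeholder name for a custom IP list populated from Google’s published ranges. Alternatively, Cloudflare’s cf.client.bot field is true for requests from bots that Cloudflare itself classifies as known good bots.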
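If you prefer an inline IP set over a named list, a small helper can generate the expression from published ranges. This Python sketch is an illustration, not a Cloudflare tool: the function name build_allow_expression and the documentation ranges in the example are hypothetical. It validates each range with the standard ipaddress module, requires both the user-agent token and the source IP to match, and emits Cloudflare’s inline set syntax (space-separated values in braces).

```python
import ipaddress


def build_allow_expression(ua_token: str, cidrs) -> str:
    """Build a rules expression that needs BOTH the UA token and an allowed source IP."""
    if not ua_token or '"' in ua_token or "\\" in ua_token:
        raise ValueError("ua_token must be non-empty and free of quotes/backslashes")
    # ip_network() rejects malformed CIDRs (including set host bits); str() normalizes them.
    nets = [str(ipaddress.ip_network(c)) for c in cidrs]
    if not nets:
        raise ValueError("at least one CIDR range is required")
    ip_set = " ".join(nets)
    return f'(http.user_agent contains "{ua_token}" and ip.src in {{{ip_set}}})'


print(build_allow_expression("Googlebot", ["192.0.2.0/24", "2001:db8::/32"]))
# (http.user_agent contains "Googlebot" and ip.src in {192.0.2.0/24 2001:db8::/32})
```

For long range lists, a named IP list keeps the expression shorter and lets you update the ranges without editing the rule itself.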

3. Disabling Bot Fight Mode Selectively

While globally disabling Bot Fight Mode may restore functionality, doing so removes protection site-wide. A better approach is to:

- Disable Bot Fight Mode temporarily to assess traffic

- Use Page Rules or expression-based rules to apply bot protection only to the paths where bots are actually a problem

4. Monitor Logs and Behavior

Continuously audit activity using Cloudflare’s Analytics section. Look for spikes in WAF blocks and narrow the rules to minimize false positives.
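One simple way to spot such spikes is a rolling threshold over daily counts. The Python sketch below assumes you have exported daily totals of blocked or challenged requests (from Cloudflare’s analytics or your own logs) into a list. The window size, the multiplier k, and the floor of 1.0 on the standard deviation are arbitrary starting values to tune, not Cloudflare recommendations.

```python
from statistics import mean, pstdev


def find_spikes(daily_counts, window=7, k=3.0):
    """Return indices of days whose count exceeds mean + k * stdev of the prior window."""
    spikes = []
    for i in range(window, len(daily_counts)):
        history = daily_counts[i - window:i]
        # Floor the stdev at 1 so a perfectly flat history does not flag tiny changes.
        threshold = mean(history) + k * max(pstdev(history), 1.0)
        if daily_counts[i] > threshold:
            spikes.append(i)
    return spikes


blocks_per_day = [10, 12, 11, 9, 10, 11, 10, 12, 80, 11]
print(find_spikes(blocks_per_day))  # [8]
```

Each flagged day is a cue to open the matching security events and check whether the blocked requests came from crawlers or partners before tightening or loosening any rule.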

Lessons Learned

This incident illustrates the balance required between defense mechanisms and day-to-day business operations. Cloudflare’s Bot Fight Mode is designed as a shield, but it must be wielded with precision to avoid collateral damage.

Key takeaways:

- Security tools require customization – Treat default settings as starting points, not permanent solutions.

- Not all bots are bad – Understanding and verifying traffic is essential.

- Monitoring and log analysis are vital – Regular audits help detect misconfigurations early.

- Open communication with partners – Alert critical API consumers about any firewall changes to prevent service disruptions.

Cloudflare itself recognizes the challenge, and over time, it has improved its bot identification mechanisms. But the onus remains on administrators to create smart rules, monitor effects, and adapt as needed.

Conclusion

Protecting your web infrastructure shouldn’t come at the cost of visibility and service reliability. Cloudflare’s Bot Fight Mode offers powerful defenses, but like any security control, its effectiveness depends on how precisely it is configured. For most use cases, adding firewall exceptions for critical API clients and search crawlers is not just recommended; it is necessary.

Organizations must continuously evolve their threat models while being vigilant about what good traffic looks like. It’s not about turning off protection—it’s about deploying it with enough granularity to let trusted partners through.

If your site relies on both external APIs and SEO performance (as most modern web apps do), then managing your bot settings proactively is not a luxury—it’s mission-critical.
