Transactional emails are a vital component of any digital platform. From password resets to order confirmations, they ensure a seamless user experience. But what happens when your provider throttles your SMTP connection due to a sudden volume spike? This article explores one such real-world case and how a smart buffering strategy helped overcome the bottleneck.

TL;DR

After a sudden spike in outbound transactional emails, the email provider began throttling SMTP connections, leading to delays and even failures. The issue stemmed from breaching rate limits during peak traffic. Implementing a buffering strategy using Redis and a simple queuing logic helped level out the email sends, complying with provider limits and improving deliverability. If you’re facing sporadic delivery and random rate-limiting, buffering might be your best strategy too.

The Incident: A Sudden Surge in Email Volume

It all began when a product feature update coincided with a marketing campaign send. Overnight, the number of transactional emails on the platform skyrocketed—order confirmations, password resets, and delivery notifications all came pouring out of the system in volumes not seen before.

Unfortunately, this spike triggered the automated protective mechanisms of the SMTP provider. The result? The platform began receiving 421 temporary failure responses at an alarming rate. Emails were being postponed, queued, or worse—discarded after repeated retries failed.

The transactional email provider, in an effort to protect its infrastructure, throttles connections by placing limits such as:

- Concurrent connections

- Messages per minute

- Volume in a 24-hour window

Little did the team realize that their default email sending logic was designed only for low to moderate volumes. When the spike occurred, the system flooded the SMTP endpoint, violating most of these constraints.

Initial Troubleshooting

After identifying the 421 response codes in the logs, the engineering team dug deeper. They found:

- Multiple open SMTP connections from parallel processes

- No retry-backoff mechanism on temporary failures

- Zero intelligent pacing logic

The app was behaving like a firehose, trying to blast out 100,000 messages an hour when the SMTP pipe was sized for 10,000.
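To make the mismatch concrete, here is a quick back-of-the-envelope calculation. It assumes the 10,000 figure is also an hourly rate, which the article implies but does not state outright; the function names are illustrative only.

```python
# Figures from the incident. Treating 10,000 as "per hour" is an assumption.
ATTEMPTED_PER_HOUR = 100_000
LIMIT_PER_HOUR = 10_000


def per_second(per_hour: int) -> float:
    """Convert an hourly message rate to messages per second."""
    return per_hour / 3600


def min_interval_seconds(per_hour: int) -> float:
    """Smallest gap between sends that keeps the hourly rate at or under the limit."""
    return 3600 / per_hour


def hours_to_drain(backlog: int, per_hour: int) -> float:
    """Time needed to send a backlog at a steady hourly rate."""
    return backlog / per_hour


print(f"attempted:   {per_second(ATTEMPTED_PER_HOUR):.1f} msg/s")  # 27.8 msg/s
print(f"sustainable: {per_second(LIMIT_PER_HOUR):.1f} msg/s")      # 2.8 msg/s
print(f"pace: one message every {min_interval_seconds(LIMIT_PER_HOUR):.2f} s")  # 0.36 s
print(f"one hour of spike drains in {hours_to_drain(ATTEMPTED_PER_HOUR, LIMIT_PER_HOUR):.0f} h")  # 10 h
```

Under that assumption, a single hour of spike traffic takes about ten hours to drain at the sustainable rate, which is why unpaced retries only made the backlog worse.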

Understanding the Limitations of SMTP Providers

SMTP providers, even the most robust ones, enforce usage policies to maintain deliverability and infrastructure stability. While many publish general thresholds, they often institute dynamic rate limits based on:

- Sender reputation

- IP warming status

- Domain history

- Spam complaint rates

The team reached out to the provider and confirmed that their account had breached acceptable usage thresholds, triggering the rate-limiting logic.

Implementing a Buffering Strategy

After understanding the nature of the problem, the team knew they couldn’t control how the SMTP provider behaves—but they could control how they send.

Here’s the buffering strategy they implemented:

- Email Queue with Redis: Messages were first written to a FIFO Redis list, acting as the primary buffer.

- Rate-Controlled Workers: Background email workers were designed to dequeue and send only a fixed number of messages per second.

- Failure-Backoff Logic: If the provider returned a 421 or any other transient 4xx response, the message was requeued with an exponential backoff.

- Schedule-Aware Load: The process was tuned to ramp up during off-peak SMTP hours to distribute load better.

This effectively created a reservoir between the application and the SMTP server, smoothing out the spikes and converting burst sends into a steady flow.
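The first three pieces of that strategy can be sketched roughly as follows. This is a minimal illustration, not the team's actual code: the key names, `MAX_FAILURES`, the backoff parameters, and the `send` callable (assumed to return the SMTP reply code as an integer) are all hypothetical. `client` is expected to behave like a redis-py `Redis` instance; only `lpush`, `rpop`, `zadd`, `zrangebyscore`, and `zrem` are used.

```python
import json
import time
from typing import Callable, List, Optional

QUEUE_KEY = "email:queue"      # hypothetical Redis list used as the FIFO buffer
DELAYED_KEY = "email:delayed"  # hypothetical sorted set: message -> retry timestamp
MAX_FAILURES = 5               # assumed cap so a message cannot retry forever


def enqueue(client, message: dict) -> None:
    """Producer side: push to the head; workers pop from the tail (FIFO)."""
    client.lpush(QUEUE_KEY, json.dumps(message))


def is_transient(code: int) -> bool:
    """SMTP 4xx replies (including 421) are temporary failures worth retrying."""
    return 400 <= code < 500


def backoff_delay(failures: int, base: float = 2.0, cap: float = 300.0) -> float:
    """Exponential backoff: base, 2*base, 4*base, ... limited to cap seconds."""
    return min(cap, base * (2 ** failures))


def promote_due(client, now: float) -> int:
    """Move delayed messages whose retry time has arrived back onto the queue."""
    moved = 0
    for raw in client.zrangebyscore(DELAYED_KEY, 0, now):
        # Only the worker that actually removes the entry re-queues it.
        if client.zrem(DELAYED_KEY, raw):
            client.lpush(QUEUE_KEY, raw)
            moved += 1
    return moved


def process_one(client, send: Callable[[dict], int], now: float) -> Optional[str]:
    """Pop one message, try to send it, and schedule a retry on a transient failure."""
    raw = client.rpop(QUEUE_KEY)
    if raw is None:
        return None
    message = json.loads(raw)
    code = send(message)
    if code < 400:
        return "sent"
    failures = message.get("failures", 0)
    if not is_transient(code) or failures >= MAX_FAILURES:
        return "failed"
    message["failures"] = failures + 1
    client.zadd(DELAYED_KEY, {json.dumps(message): now + backoff_delay(failures)})
    return "retry"


def run_worker(client, send, rate_per_second: float, max_iterations: int,
               sleep=time.sleep, clock=time.time) -> List[str]:
    """Send at most rate_per_second messages per second for max_iterations slots."""
    interval = 1.0 / rate_per_second
    outcomes = []
    for _ in range(max_iterations):
        started = clock()
        promote_due(client, started)
        outcome = process_one(client, send, started)
        if outcome is not None:
            outcomes.append(outcome)
        # Sleep off whatever is left of this slot so bursts become a steady flow.
        sleep(max(0.0, interval - (clock() - started)))
    return outcomes
```

A real worker would loop indefinitely rather than for a fixed number of iterations, and would likely use an atomic move (for example a Lua script) so that a crash between `zrem` and `lpush` cannot lose a message. The `sleep` and `clock` parameters exist only so the pacing can be exercised without waiting in real time.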

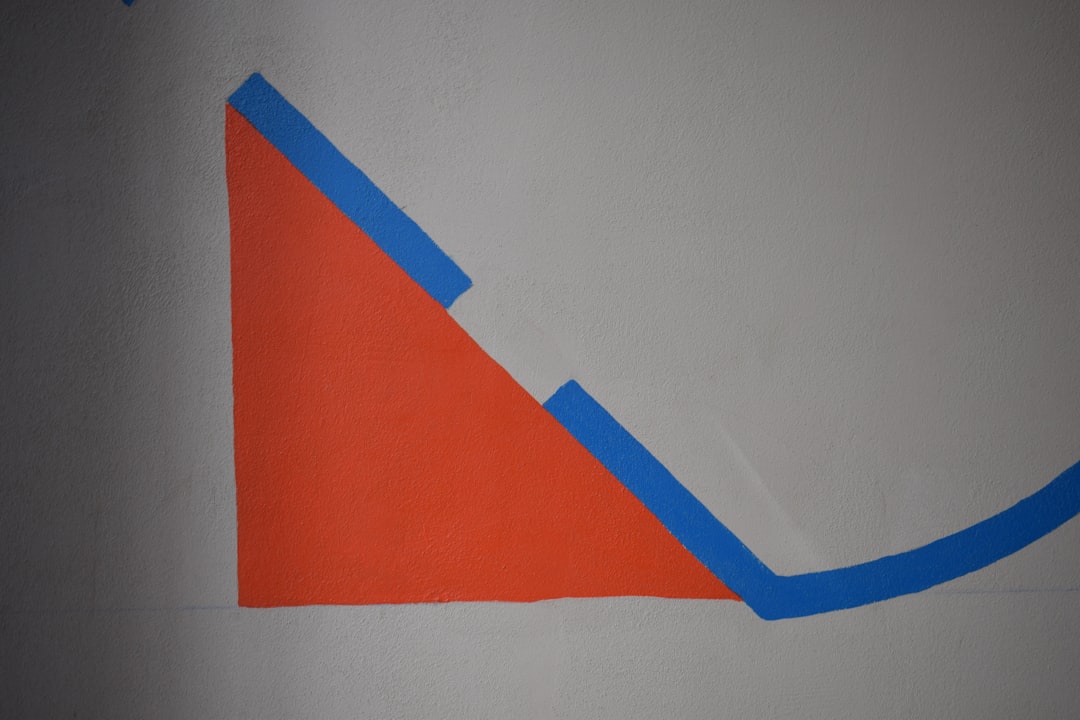

Visualizing the Result

Before the buffer, the system would attempt sending all messages the instant events were triggered. After implementing the buffer:

- SMTP errors dropped by 92% in the first 24 hours

- Email delivery times improved drastically

- System resource usage decreased due to fewer retries and failures

Moreover, the team could monitor the size of the Redis buffer to detect new spikes early. Alerts were set up on queue lengths to flag growing backlogs.
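A queue-length check of this kind could look something like the sketch below. The thresholds, the key name, and the `BacklogStatus` type are made up for illustration; `client` is assumed to expose `llen` the way a redis-py `Redis` instance does. In a setup like the one described, the resulting numbers would be exported as metrics and alerted on from the dashboard rather than printed.

```python
from dataclasses import dataclass


@dataclass
class BacklogStatus:
    depth: int            # messages currently waiting in the buffer
    level: str            # "ok", "warning", or "critical"
    drain_minutes: float  # estimated time to empty the buffer at the current pace


def check_backlog(client, send_rate_per_second: float,
                  queue_key: str = "email:queue",  # hypothetical key name
                  warn_at: int = 5_000,            # assumed thresholds
                  critical_at: int = 20_000) -> BacklogStatus:
    """Classify the current queue depth and estimate how long it takes to drain."""
    depth = client.llen(queue_key)
    if depth >= critical_at:
        level = "critical"
    elif depth >= warn_at:
        level = "warning"
    else:
        level = "ok"
    drain_minutes = depth / send_rate_per_second / 60
    return BacklogStatus(depth, level, drain_minutes)
```

Reporting the estimated drain time alongside the raw depth makes an alert easier to act on: a backlog of 6,000 messages at 2 messages per second means roughly 50 minutes of added delivery delay.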

What Tools Were Helpful?

To implement this strategy, the team leaned on a few key technologies:

- Redis: Fast, in-memory data store used for queuing

- Celery / Sidekiq: Worker queues to handle background job dispatch

- Grafana: For visualizing queue length, send rate, and throttle frequency

They also wrote rate-limiting wrappers around send logic to implement delay per request when failures rose above a chosen threshold.
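One way such a wrapper might be written is shown below. It is a sketch under assumptions: the window size, threshold, and delay are arbitrary, the class name is invented, and the wrapped `send` callable is assumed to return the SMTP reply code as an integer.

```python
import time
from collections import deque
from typing import Callable


class AdaptiveThrottle:
    """Wrap a send function and pause before each call while failures run high."""

    def __init__(self, send: Callable[[dict], int], window: int = 50,
                 threshold: float = 0.2, delay: float = 1.0, sleep=time.sleep):
        self._send = send
        self._recent = deque(maxlen=window)  # True for each recent transient failure
        self.threshold = threshold
        self.delay = delay
        self._sleep = sleep

    def failure_rate(self) -> float:
        """Share of transient failures among the most recent calls."""
        if not self._recent:
            return 0.0
        return sum(self._recent) / len(self._recent)

    def __call__(self, message: dict) -> int:
        # Add a delay per request only while the recent failure rate is too high.
        if self.failure_rate() > self.threshold:
            self._sleep(self.delay)
        code = self._send(message)
        self._recent.append(400 <= code < 500)
        return code
```

Because the window is bounded, the extra delay disappears on its own once enough successful sends push the failure rate back under the threshold.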

Lessons Learned

The incident served as a wake-up call that:

- SMTP infrastructure is not infinite—respect the provider’s rules

- Bursty traffic needs smoothing before hitting a transactional service

- Backoff and retry control can greatly reduce the effect of transient errors

- Observability (logs and metrics) is non-negotiable in large message systems

More importantly, it taught that treating transactional email dispatch with the same maturity as a financial transaction system pays off in the long run.

Conclusion

Dealing with SMTP throttling can be frustrating, especially during high-traffic windows. However, with a thoughtful buffering strategy, you can turn a spiky, failure-prone setup into a controlled, dependable message pipeline. Redis, exponential retry backoff, and measured pacing turned out to be the winning formula.

If you’re facing unexpected delivery failures during peak times, it’s time to ask: are you sending smart or sending fast?

FAQ

What is SMTP throttling?

SMTP throttling occurs when an email provider limits the rate, volume, or concurrency of messages being sent to protect their infrastructure from abuse.

Why did my provider start throttling suddenly?

Sudden spikes in traffic, high bounce rates, or exceeding your usual send patterns can trigger automated protection mechanisms in your provider’s system.

What is a buffering strategy?

A buffering strategy involves temporarily storing messages in a queue or intermediate system to control the rate at which they are sent to the final destination.

How can Redis help with email dispatch?

Redis can function as a high-speed queue for emails, enabling rate-limiting and controlled dequeueing by worker processes to maintain compliance with provider send limits.

How long does it take to implement such a system?

Depending on infrastructure, a basic buffering system can be implemented in 1-2 days with existing queue/task frameworks like Celery or Bull. Fine-tuning can take longer.

Is email buffering applicable for marketing newsletters as well?

Yes, while the context here is transactional email, the same principles apply to bulk marketing sends where pacing and throttling management is essential.
