It began like any other day on the backend. Web traffic looked stable, servers were humming along, and there were no alerts in sight. Then, seemingly out of nowhere, the entire PHP stack on one of our production boxes came to a screeching halt. Web pages failed to load, API calls timed out, and users were left staring at loading spinners. The root cause? A swarm of eager SMTP queue workers had consumed every one of our available PHP-FPM processes. What followed was a diagnostic dive into resource management, misconfiguration, and the magic of tuning worker limits.

TL;DR

Our server became unresponsive because a large number of background SMTP queue workers consumed all PHP-FPM processes, blocking web requests. The workers ran PHP scripts for email processing and, in doing so, exhausted the pool they shared with web traffic. We resolved it by enforcing strict process limits in supervisord, capping how many workers could run concurrently. Stability returned immediately, and the incident taught us a valuable lesson about shared process pools.

Setting the Scene: The Stack in Question

Let’s get a better understanding of the application setup. We were running a fairly common stack:

- Nginx as the web server

- PHP-FPM to handle PHP requests

- Supervisor to manage background workers

- Laravel Queue for handling email and job queues

- SMTP email processing through Laravel’s Mail::queue

Initially, everything was stable. As our user base grew, however, so did activity, including emails triggered by sign-ups, password resets, and marketing updates. All of these emails were routed through Laravel background workers that executed PHP scripts under their own PHP-FPM context.

The Outage: Symptoms and Patterns

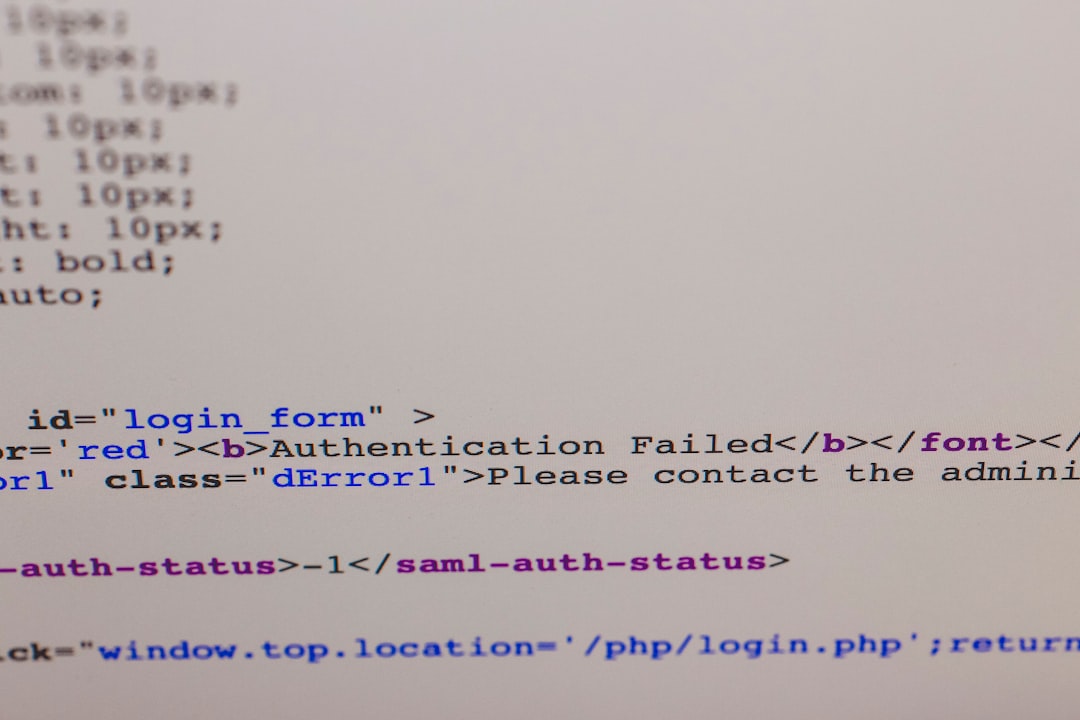

Suddenly, alerts flooded in. Health checks timed out, response times spiked, and web traffic tanked. The logs told a grim story: PHP-FPM had no more available workers. Requests forwarded by Nginx were failing, with errors like the dreaded “Primary script unknown or failed to open stream” turning up in the logs.

At first, we suspected a traffic spike, but server metrics said otherwise: CPU and memory usage were moderate. Only when we ran a few diagnostic commands, such as ps aux | grep php-fpm and netstat -pant, did we spot the culprit.

All available PHP-FPM processes were occupied handling queue:work commands. These weren’t incoming HTTP requests. They were internal backend jobs started by the SMTP queue workers: PHP scripts that consumed FPM workers just like any API call.
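To make that kind of tally repeatable, here is a minimal Python sketch that sorts ps aux lines into the PHP-FPM master, PHP-FPM pool children, artisan queue:work processes, and everything else. It assumes the usual ps aux column layout and the "php-fpm: pool www" process titles PHP-FPM sets. The sample output and the helper names are hypothetical. Note that ps cannot tell a busy FPM child from an idle one, so this shows what is running, not what is saturated.

```python
from collections import Counter

# Hypothetical ps aux output; real output has many more lines.
SAMPLE = """USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
root 101 0.0 0.1 200000 9000 ? Ss 09:00 0:01 php-fpm: master process (/etc/php/fpm/php-fpm.conf)
www-data 102 1.0 0.5 300000 40000 ? S 09:00 0:05 php-fpm: pool www
www-data 103 1.0 0.5 300000 40000 ? S 09:00 0:05 php-fpm: pool www
www-data 201 2.0 0.8 350000 60000 ? S 09:01 0:09 php /var/www/app/artisan queue:work --sleep=3 --tries=3
"""


def classify(command: str) -> str:
    """Map a ps COMMAND column to a coarse process category."""
    if "php-fpm: master process" in command:
        return "fpm-master"
    if "php-fpm: pool" in command:
        return "fpm-worker"
    if "artisan queue:work" in command:
        return "queue-worker"
    return "other"


def tally(ps_lines) -> Counter:
    """Count processes per category from ps aux lines (11 columns, COMMAND last)."""
    counts = Counter()
    for line in ps_lines:
        fields = line.split(None, 10)  # keep the COMMAND column intact
        if len(fields) < 11 or fields[0] == "USER":
            continue  # skip the header and malformed lines
        counts[classify(fields[10])] += 1
    return counts


counts = tally(SAMPLE.splitlines())
print(counts["fpm-worker"], counts["queue-worker"])  # 2 1
```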

The Root Cause: Workers Starved the Web

The Laravel queue system was configured to fire emails on specific application events. These jobs were enqueued properly, but when supervisord spun up multiple worker processes to handle the queue, they in turn loaded PHP, connected back via PHP-FPM, and consumed one FPM process at a time.

Here’s the twist: our PHP-FPM pool had no isolation between ‘web’ processes and ‘worker’ processes. Both drew from the same pool of 20 workers. Hence, when the queue got flooded and workers tried to parallelize job execution, they created a denial-of-service situation on our own app.
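The starvation mechanism can be modelled with a counting semaphore standing in for the pool. This is a hypothetical Python sketch, not PHP-FPM's actual scheduling. Each slot represents one FPM child, background jobs hold slots until they finish, and a web request succeeds only if a slot is free. In reality Nginx waits in FPM's listen backlog until it times out rather than failing instantly, so the non-blocking acquire stands in for that timeout. The 16/4 split in the isolated case is an arbitrary illustration.

```python
import threading

# Hypothetical model: each semaphore slot stands for one PHP-FPM child.
MAX_CHILDREN = 20  # pool size from the incident


def occupy(pool: threading.BoundedSemaphore, n: int) -> int:
    """Simulate up to n long-running jobs, each holding one slot; return slots taken."""
    taken = 0
    for _ in range(n):
        if not pool.acquire(blocking=False):
            break  # pool exhausted
        taken += 1
    return taken


def web_request_served(pool: threading.BoundedSemaphore) -> bool:
    """A web request succeeds only if a slot is free right now."""
    if pool.acquire(blocking=False):
        pool.release()  # request finished, slot returned
        return True
    return False


def demo() -> tuple:
    # Shared pool: queue jobs and web traffic compete for the same 20 slots.
    shared = threading.BoundedSemaphore(MAX_CHILDREN)
    occupy(shared, MAX_CHILDREN)
    shared_ok = web_request_served(shared)

    # Isolated pools (hypothetical 16/4 split): workers can only exhaust their own pool.
    web_pool = threading.BoundedSemaphore(16)
    worker_pool = threading.BoundedSemaphore(4)
    worker_slots = occupy(worker_pool, MAX_CHILDREN)
    isolated_ok = web_request_served(web_pool)
    return shared_ok, isolated_ok, worker_slots


print(demo())  # (False, True, 4)
```

In the shared case, flooding the pool with background jobs leaves no slot for the web request. With separate pools, the same flood is capped at the worker pool's four slots.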

The system log was full of entries like:

```
[WARNING] [pool www] server reached max_children setting (20), consider raising it
```

Raising it, as tempting as that was, would only kick the can further down the road. Resource starvation would still occur, just later and less predictably.
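Raising the limit is ultimately a memory question. Each FPM child holds its own PHP memory, so a common rule of thumb sizes pm.max_children as the RAM available to PHP-FPM divided by the average size of a child. The sketch below applies that arithmetic with hypothetical figures (a 1600 MB budget and about 80 MB per child), not measurements from this incident.

```python
def max_children_for(ram_budget_mb: int, avg_child_mb: int) -> int:
    """Largest pm.max_children whose children fit in the RAM budget (rough heuristic)."""
    if avg_child_mb <= 0:
        raise ValueError("avg_child_mb must be positive")
    return ram_budget_mb // avg_child_mb


def memory_needed_mb(max_children: int, avg_child_mb: int) -> int:
    """Worst-case RAM if every child is busy at its average size."""
    return max_children * avg_child_mb


# Hypothetical figures: 1600 MB set aside for PHP-FPM, ~80 MB per child.
print(max_children_for(1600, 80))  # 20
print(memory_needed_mb(40, 80))    # 3200
```

Under those assumptions, doubling the limit to 40 would need roughly 3.2 GB. Unbounded workers would still be free to claim the new slots as well.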

Diagnosis and Remedies Explored

We explored several solutions:

- Increasing PHP-FPM worker count — briefly considered, but rejected due to memory constraints and risk of worsening resource abuse.

- Separating FPM pools — promising, but would require significant architectural changes.

- Limiting queue worker concurrency — the cleanest, fastest fix.

Ultimately, the root of the issue was unbounded queue workers. At times of high email load, they tried to run too many jobs at once, indirectly exhausting limited FPM resources.
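Bounding concurrency is the core idea of the fix. In our setup the cap is Supervisor's numprocs. The Python sketch below uses ThreadPoolExecutor's max_workers as an analogy and records the peak number of simulated email jobs running at once. The sleep is a stand-in for SMTP latency, and nothing here talks to a real mail server or to Laravel. The helper names are hypothetical.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class ConcurrencyProbe:
    """Tracks how many simulated jobs are running at the same time."""

    def __init__(self):
        self._lock = threading.Lock()
        self.current = 0
        self.peak = 0

    def __enter__(self):
        with self._lock:
            self.current += 1
            self.peak = max(self.peak, self.current)
        return self

    def __exit__(self, *exc):
        with self._lock:
            self.current -= 1
        return False  # do not swallow exceptions


def send_email_job(probe: ConcurrencyProbe, seconds: float = 0.01) -> None:
    # Stand-in for a slow SMTP send; the sleep models network latency.
    with probe:
        time.sleep(seconds)


def run_queue(jobs: int, max_workers: int) -> int:
    """Drain `jobs` simulated emails with at most `max_workers` in flight; return the peak."""
    probe = ConcurrencyProbe()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for _ in range(jobs):
            pool.submit(send_email_job, probe)
    return probe.peak  # the with-block waits for all jobs to finish


print(run_queue(50, 2) <= 2)  # True
```

However many jobs are queued, the number in flight never exceeds the cap. A burst of email simply takes longer to drain instead of fanning out.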

The Fix: Taming the Worker Herd

We zeroed in on the configuration of supervisord, the service managing our Laravel queue workers. Previously, we had configured it something like this:

```ini
[program:laravel-worker]
process_name=%(program_name)s_%(process_num)02d
command=php /var/www/app/artisan queue:work --sleep=3 --tries=3
numprocs=8
autostart=true
autorestart=true
```

Eight worker processes, each able to occupy a PHP-FPM process. Against a ceiling of 20 PHP-FPM processes, eight workers running concurrently, or stalling, could tie up a large share of the slots. This was especially true when jobs lingered on SMTP timeouts or retries.

We dialed numprocs down to 2 and added two flags. The first, --timeout, ensures a hung job (say, one stuck on an unresponsive SMTP server) can’t freeze a worker indefinitely. The second, --max-jobs, recycles each worker after a fixed number of jobs:

```ini
[program:laravel-worker]
process_name=%(program_name)s_%(process_num)02d
command=php /var/www/app/artisan queue:work --sleep=3 --tries=3 --timeout=60 --max-jobs=50
numprocs=2
autostart=true
autorestart=true
```
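Since the outage came down to a single config value, it can be worth checking that value mechanically. This hypothetical Python guard parses the two Supervisor configs above with configparser, sums numprocs for programs running queue:work, and compares the total with a share of pm.max_children. It assumes the one-FPM-slot-per-worker model described earlier. The 25% threshold is an arbitrary illustration, not a recommendation.

```python
import configparser

# The two Supervisor configs from this post (before and after the fix).
OLD_CONF = """
[program:laravel-worker]
process_name=%(program_name)s_%(process_num)02d
command=php /var/www/app/artisan queue:work --sleep=3 --tries=3
numprocs=8
autostart=true
autorestart=true
"""

NEW_CONF = """
[program:laravel-worker]
process_name=%(program_name)s_%(process_num)02d
command=php /var/www/app/artisan queue:work --sleep=3 --tries=3 --timeout=60 --max-jobs=50
numprocs=2
autostart=true
autorestart=true
"""


def queue_worker_procs(conf_text: str) -> int:
    """Sum numprocs over every [program:*] section whose command runs queue:work."""
    # interpolation=None: %(program_name)s is Supervisor syntax, not configparser's.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    total = 0
    for name in parser.sections():
        section = parser[name]
        if name.startswith("program:") and "queue:work" in section.get("command", ""):
            total += section.getint("numprocs", fallback=1)  # Supervisor defaults to 1
    return total


def within_budget(conf_text: str, max_children: int, worker_share: float = 0.25) -> bool:
    """True if queue workers can hold at most worker_share of the FPM pool (one slot each)."""
    return queue_worker_procs(conf_text) <= int(max_children * worker_share)


print(within_budget(OLD_CONF, 20), within_budget(NEW_CONF, 20))  # False True
```

With numprocs=8 the guard fails, since 8 workers exceed an allowance of 5 out of 20. With numprocs=2 it passes.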

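There was no prefetch setting to tune here. A Laravel worker processes one job at a time, so numprocs is effectively the concurrency cap. Since Laravel 8, --max-jobs makes a worker exit after the given number of jobs so Supervisor can restart it fresh, and --delay (called --backoff in newer Laravel releases) spaces out retries of failed jobs.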
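The recycling behaviour of --max-jobs combined with Supervisor's autorestart=true can be sketched as a simple loop. This Python model is a simplification with hypothetical names. A worker runs up to max_jobs jobs and exits, and the supervisor loop starts a fresh one while work remains. Unlike a real queue:work process, the modelled worker also exits on an empty queue instead of sleeping and polling again, and --timeout is not modelled.

```python
from collections import deque
from typing import Callable, Deque

Job = Callable[[], None]


def run_worker(queue: Deque[Job], max_jobs: int) -> int:
    """One worker lifetime: run jobs until max_jobs is reached, then exit (like --max-jobs).

    Simplification: also exits when the queue is empty, where a real worker would sleep.
    """
    if max_jobs <= 0:
        raise ValueError("max_jobs must be positive in this model")
    done = 0
    while queue and done < max_jobs:
        job = queue.popleft()
        job()
        done += 1
    return done


def supervise(queue: Deque[Job], max_jobs: int) -> int:
    """Restart the worker whenever it exits while work remains (like autorestart=true).

    Returns how many worker lifetimes were needed to drain the queue.
    """
    starts = 0
    while queue:
        starts += 1
        run_worker(queue, max_jobs)
    return starts


jobs = deque(lambda: None for _ in range(120))  # 120 no-op stand-in jobs
print(supervise(jobs, max_jobs=50))  # 3
```

With 120 queued jobs and max_jobs=50, the queue drains in three worker lifetimes (50, 50, then 20 jobs). Each restart gives the worker a fresh PHP process, which limits how long any leaked state or memory can accumulate.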

Aftermath: Proof of Stabilization

With the new configuration in place, the server began to breathe again. PHP-FPM logs settled down, and web requests were routed and executed on time. Queued emails still went out, just slightly slower and, more importantly, without dragging down the frontend.

Metrics told the rest of the story. Here’s what changed:

- Average PHP-FPM usage: dropped from near 100% to a stable 40-50%

- Web latency: decreased from 3-5s timeouts to < 200ms

- Server load averages: smoothed out without fluctuations

Users didn’t notice any slowdown in email delivery. Even if an email took an extra five seconds to send, it was far preferable to the web app becoming inaccessible.

Lessons Learned

This incident taught us a few crucial lessons about distributed server architectures and resource sharing:

- PHP-FPM is a finite pool – treat it as such.

- Background workers should not starve core services – rate-limit them where needed.

- Monitoring isn’t enough; enforce caps – both on queue consumption and FPM capacity.

- Distinguish between workloads – if mixing workers and web, consider isolated FPM pools or containers.

Final Thoughts

While we resolved our specific crisis through configuration tuning and by limiting Laravel’s worker concurrency, the bigger insight was architectural: shared process pools create hidden dependencies. In high-load systems, components interact in unexpected ways, and one seemingly isolated queue can domino into a full-blown outage.

Designing for resilience means anticipating not just external failures, but internal ones—where poorly bounded background work can take down frontend performance. We may eventually migrate to containerized workers or separate job servers, but until then, we’ve at least ensured PHP-FPM won’t become a bottleneck again.

And it all started with some over-eager email queue workers.
